New Paper Accepted: Trusting Data Updates to Drone-Based Model Evolution

Our paper entitled Trusting Data Updates to Drone-Based Model Evolution has been accepted for publication at the GENZERO 2024 workshop.

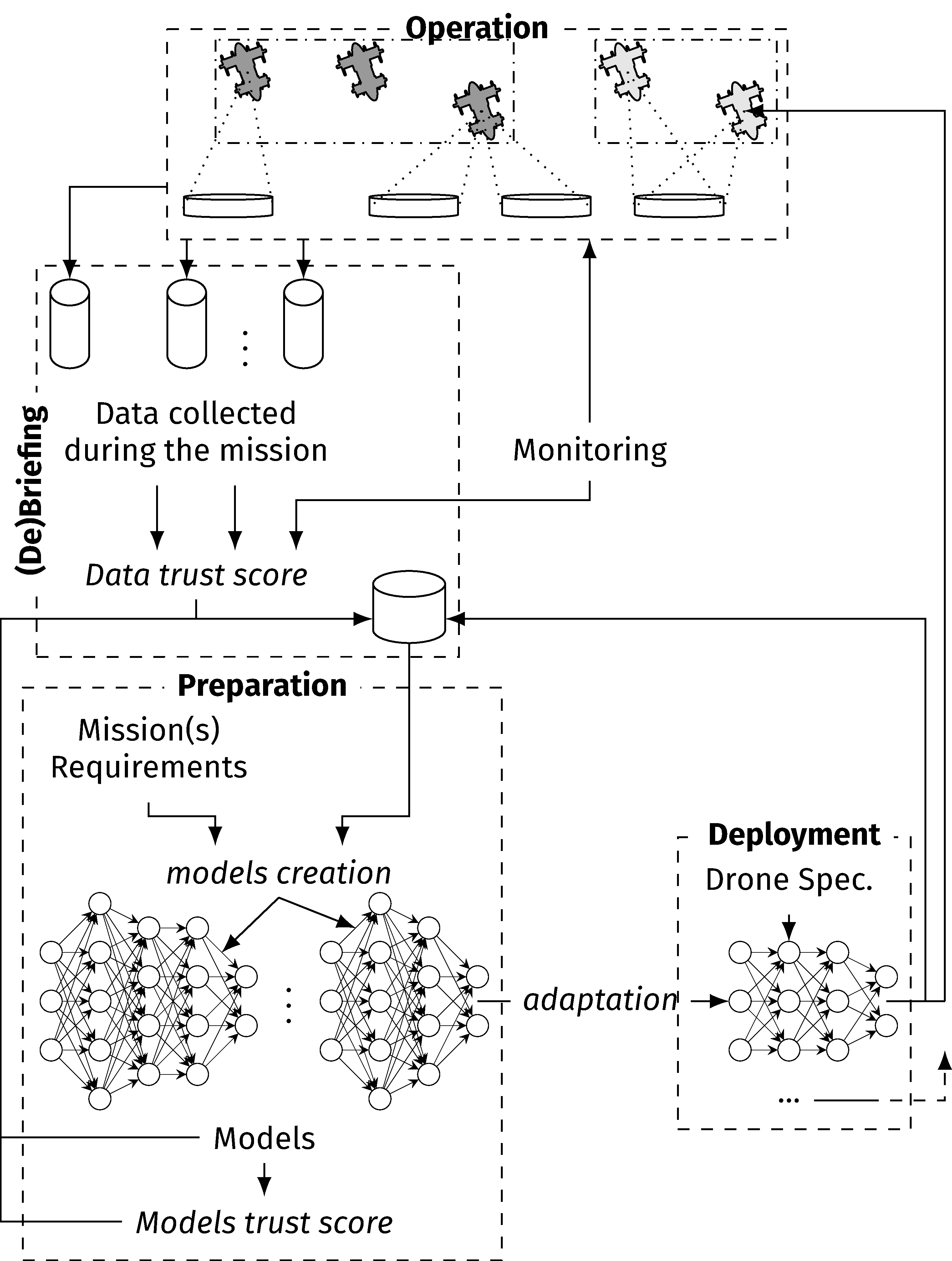

In this paper, we set out our vision for trustworthy AI in federated scenarios, particularly in collaborative drone swarm applications. The picture above gives an overview of our approach.

This work is a collaboration between our group at SESAR Lab, Università degli Studi di Milano and C2PS (Centre for Cyber-Physical Systems), Khalifa University, Abu Dhabi, UAE.

The authors of the paper are: Marco Anisetti, Claudio A. Ardagna, Nicola Bena (me), Ernesto Damiani, Chan Yeob Yeun, and Sangyoung Yoon.

Below is the full abstract.

AI is revolutionizing our society, promising unmatched efficiency and effectiveness in numerous tasks. It is already exhibiting remarkable performance in several fields, from smartphone cameras to smart grids, from finance to medicine, to name but a few. Given the increasing reliance of applications, services, and infrastructures on AI models, it is fundamental to protect these models from malicious adversaries. On the one hand, AI models are black boxes whose behavior is unclear and depends on training data. On the other hand, an adversary can render an AI model unusable with just a few specially crafted inputs, driving the model’s predictions according to her desires. This threat is especially relevant to collaborative protocols for AI model training and inference. These protocols may involve participants whose trustworthiness is uncertain, raising concerns about insider attacks on data, parameters, and models. These attacks ultimately endanger humans, as AI models power smart services in real life (AI-based IoT). A key need emerges: ensuring that AI models and, more generally, AI-based systems trained and operating in a low-trust environment can guarantee a given set of non-functional requirements, including cybersecurity-related ones. Our paper targets this need, focusing on collaborative drone swarm missions in hostile environments. We propose a methodology that supports trustworthy data circulation and AI training among different, possibly untrusted, organizations involved in collaborative drone swarm missions. This methodology aims to strengthen collaborative training, possibly built on incremental and federated learning.

The paper is available here (open access).