New Paper Accepted: Continuous Management of Machine Learning-Based Application Behavior

Our paper entitled Continuous Management of Machine Learning-Based Application Behavior has been accepted for publication in the journal IEEE Transactions on Services Computing.

In this paper, we extend the methodology first proposed in E. Damiani, C. A. Ardagna, “Certified Machine-Learning Models”, in Proc. of SOFSEM 2020, Limassol, Cyprus, January 2020 and M. Anisetti, C. A. Ardagna, E. Damiani, P. G. Panero, “A Methodology for Non-Functional Property Evaluation of Machine Learning Models”, in Proc. of MEDES 2020, Virtual, November 2020 to manage the behavior of ML-based applications at run time.

Managing the behavior of ML-based applications at run time is a hot research topic, and we approach it from a different perspective. Our goal is to ensure that the behavior of an ML-based application with respect to a given non-functional property remains stable over time. Existing approaches either

- focus on a specific non-functional property (e.g., fairness) and ensure that the ML model supports the property over time, as new data points arrive for inference (or training, depending on the setting)

- focus on functional management and ensure that the ML model/application maintains high accuracy (or other quality metrics) over time, as new data points arrive for inference (or training, depending on the setting)

Our approach

- takes as input a quantitative, measurable definition of a non-functional property (e.g., variance for fairness)

- relies on a set of trained ML models

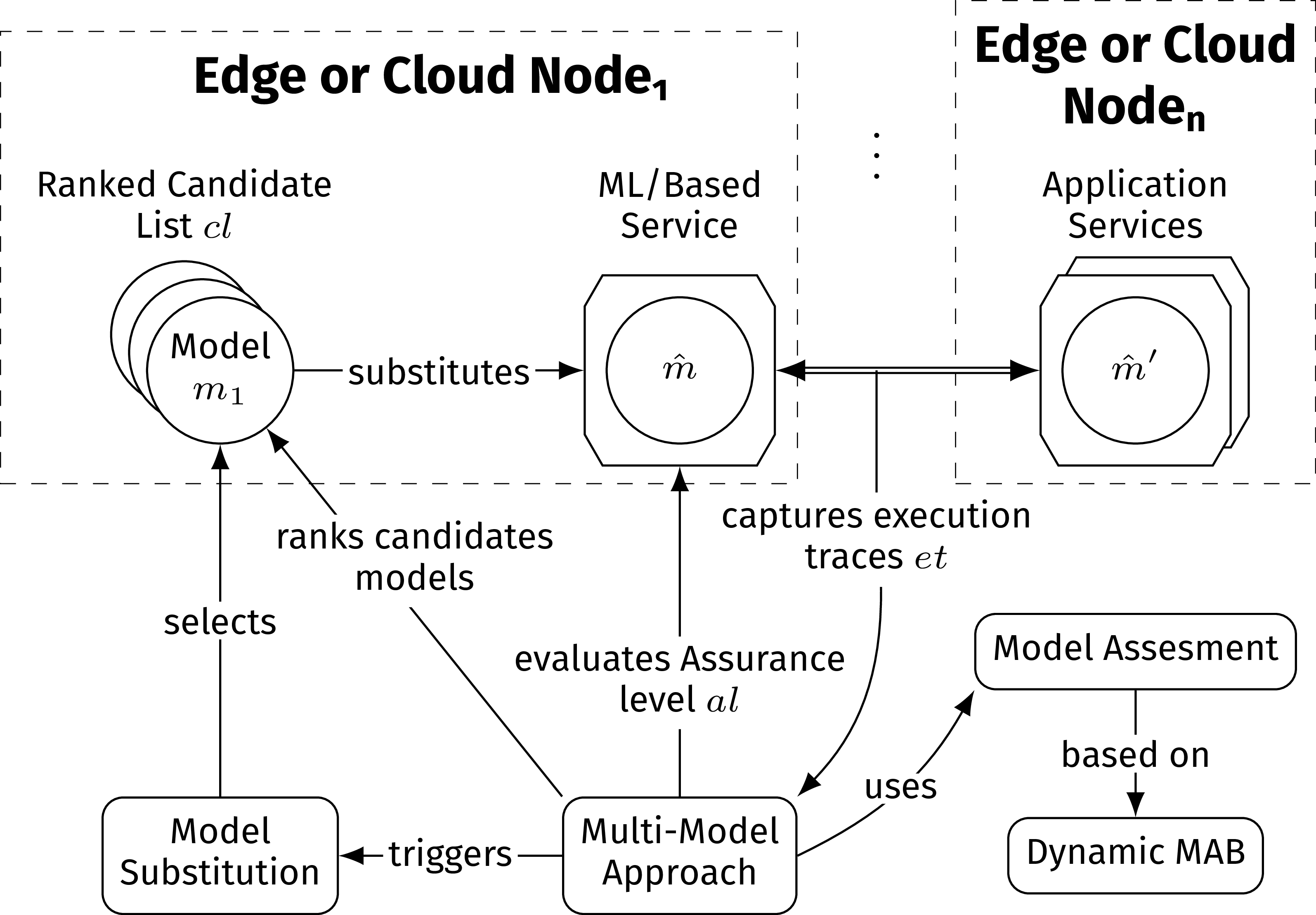

- selects one ML model to be used for inference and continuously monitors it: when the model degrades in terms of the non-functional property, it is replaced by another ML model in the pool.
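To illustrate how a quantitative definition of a non-functional property can drive the choice among pool models, the following minimal Python sketch assumes that fairness is measured as the variance of the positive-prediction rate across protected groups, and picks the model with the lowest variance. The metric, the `fairness_variance` and `select_model` helpers, and the toy pool are hypothetical and are not taken from the paper.

```python
from statistics import pvariance
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical input sample: (protected group, feature value).
Sample = Tuple[str, float]


def fairness_variance(predictions: Sequence[int], groups: Sequence[str]) -> float:
    """Assumed fairness metric: population variance of the positive-prediction
    rate across protected groups (0.0 means identical rates in every group)."""
    by_group: Dict[str, List[int]] = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = [sum(preds) / len(preds) for preds in by_group.values()]
    return pvariance(rates)


def select_model(pool: Dict[str, Callable[[Sample], int]],
                 samples: Sequence[Sample]) -> Tuple[str, Dict[str, float]]:
    """Score every model in the pool and return the one with the lowest variance."""
    groups = [group for group, _ in samples]
    scores = {name: fairness_variance([model(s) for s in samples], groups)
              for name, model in pool.items()}
    return min(scores, key=scores.get), scores


# Toy pool: one model ignores the group, the other predicts from the group only.
pool = {
    "fair": lambda s: int(s[1] > 0.5),
    "biased": lambda s: int(s[0] == "a"),
}
samples = [("a", 0.2), ("a", 0.8), ("b", 0.3), ("b", 0.9)]
best, scores = select_model(pool, samples)
print(best, scores)
```

Lower variance is treated as better here only because that is how this hypothetical metric is defined; any other measurable definition of the property could be plugged into `select_model` in its place.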

Model monitoring and substitution are based on a Dynamic Multi-Armed Bandit (Dynamic MAB). Consider first a traditional MAB-based approach: it evaluates the ML models in terms of the non-functional property and retrieves the winning model, which is then used by the application, replacing the previous one when the latter's (non-functional) quality degrades. In our Dynamic MAB approach, ML models are instead evaluated in windows of execution traces (i.e., inference-time data points and the corresponding predictions). The Dynamic MAB automatically determines when a window terminates, that is, when its decision is statistically significant. In addition, it maintains a tunable memory of past executions, to avoid re-instantiating the MAB at every window.
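For illustration only, the window mechanism described above can be approximated by the following Python sketch. It assumes Beta-Bernoulli Thompson sampling, a binary reward that is 1 when a model's behavior on an execution trace satisfies a hypothetical non-functional check, a window that closes once one model's Monte Carlo estimated probability of being best reaches `confidence`, and a `memory` factor that shrinks past evidence toward the prior at the start of each window. All of these choices and names are assumptions, not the paper's algorithm.

```python
import random
from typing import Callable, Dict, Iterable, List, Tuple


class DynamicMAB:
    """Illustrative dynamic multi-armed bandit over a pool of ML models.

    Assumptions (not from the paper): Beta-Bernoulli Thompson sampling,
    binary non-functional rewards, Monte Carlo 'probability of being best'
    as the significance test, and a decay factor as the tunable memory.
    """

    def __init__(self, arms: Iterable[str], confidence: float = 0.95,
                 memory: float = 0.5, draws: int = 500, min_pulls: int = 20,
                 seed: int = 0) -> None:
        self.arms: List[str] = list(arms)
        self.alpha: Dict[str, float] = {a: 1.0 for a in self.arms}
        self.beta: Dict[str, float] = {a: 1.0 for a in self.arms}
        self.confidence = confidence
        self.memory = memory  # 0.0 = forget everything, 1.0 = keep all evidence
        self.draws = draws
        self.min_pulls = min_pulls
        self.rng = random.Random(seed)

    def _sample(self) -> Dict[str, float]:
        # One posterior draw per arm (Thompson sampling).
        return {a: self.rng.betavariate(self.alpha[a], self.beta[a]) for a in self.arms}

    def prob_best(self) -> Dict[str, float]:
        # Monte Carlo estimate of P(arm has the highest success rate).
        wins = {a: 0 for a in self.arms}
        for _ in range(self.draws):
            draw = self._sample()
            wins[max(draw, key=draw.get)] += 1
        return {a: w / self.draws for a, w in wins.items()}

    def _start_window(self) -> None:
        # Shrink past evidence toward the Beta(1, 1) prior instead of resetting it.
        for a in self.arms:
            self.alpha[a] = 1.0 + self.memory * (self.alpha[a] - 1.0)
            self.beta[a] = 1.0 + self.memory * (self.beta[a] - 1.0)

    def run_window(self, traces: Iterable[object],
                   reward: Callable[[str, object], int]) -> Tuple[str, int]:
        """Consume traces until the winner is significant; return (winner, traces used)."""
        self._start_window()
        pulls = 0
        for trace in traces:
            draw = self._sample()
            arm = max(draw, key=draw.get)
            r = reward(arm, trace)  # 1 if the property holds on this trace
            self.alpha[arm] += r
            self.beta[arm] += 1 - r
            pulls += 1
            if pulls >= self.min_pulls:
                probs = self.prob_best()
                leader = max(probs, key=probs.get)
                if probs[leader] >= self.confidence:
                    return leader, pulls  # window terminates here
        probs = self.prob_best()  # traces exhausted before significance
        return max(probs, key=probs.get), pulls


# Toy environment: hypothetical per-model probability of satisfying the property.
P = {"m1": 0.9, "m2": 0.5, "m3": 0.2}
env = random.Random(1)


def reward(arm: str, trace: object) -> int:
    return int(env.random() < P[arm])


mab = DynamicMAB(P.keys())
winner, used = mab.run_window(range(300), reward)
print(winner, used)
```

Because the posterior is only decayed rather than reset, a second `run_window` call on the same object typically needs fewer traces when the winner has not changed; setting `memory=0.0` reproduces the per-window re-instantiation that the Dynamic MAB is meant to avoid.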

To summarize, the Dynamic MAB evaluates the ML models on the given execution traces in dynamically sized windows. At the end of each window, the winning model is put in production, replacing the previous one and ensuring stable non-functional behavior.

What happens when the currently selected model degrades severely before the window is over? To address this issue, we introduce the notion of early substitution: a substitution threshold is fixed, and when the model in production falls below it, the model is replaced without waiting for the end of the window.
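Early substitution can be sketched as a check run on every trace while a window is open. In this minimal Python example, assumed for illustration, `quality` is a hypothetical per-trace indicator (1 if the model in production satisfies the non-functional property on that trace), the check compares the model's running average against `threshold`, and the replacement is the best-ranked other model, for instance taken from the latest Dynamic MAB ranking. None of these details are taken from the paper.

```python
from typing import Callable, Iterable, List, Optional, Sequence, Tuple


def serve_window(current: str, ranking: Sequence[str], traces: Iterable[int],
                 quality: Callable[[str, int], int], threshold: float,
                 min_samples: int = 10) -> Tuple[str, List[Tuple[int, str, str]]]:
    """Serve one window, substituting the production model early if its running
    non-functional quality drops below `threshold` (hypothetical rule)."""
    ok = seen = 0
    substitutions: List[Tuple[int, str, str]] = []
    for i, trace in enumerate(traces):
        ok += quality(current, trace)
        seen += 1
        if seen >= min_samples and ok / seen < threshold:
            replacement: Optional[str] = next((m for m in ranking if m != current), None)
            if replacement is None:
                continue  # no alternative model in the pool
            substitutions.append((i, current, replacement))
            current = replacement
            ok = seen = 0  # start monitoring the new model afresh


    return current, substitutions


# Toy scenario: m1 stops satisfying the property from trace 20 on; m2 always does.
def degrading_quality(model: str, trace: int) -> int:
    return 1 if model == "m2" or trace < 20 else 0


final, subs = serve_window("m1", ["m1", "m2", "m3"], range(50), degrading_quality, threshold=0.8)
print(final, subs)  # m2 [(25, 'm1', 'm2')]
```

A cumulative average reacts slowly to a sudden drop (here the substitution happens five traces after degradation starts); averaging over a sliding window of recent traces would react faster, at the cost of more noise.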

The following picture summarizes our approach.

The authors of the paper are: Marco Anisetti, Claudio A. Ardagna, Nicola Bena (me), Ernesto Damiani, and Paolo G. Panero.

Below is the full abstract.

Modern applications are increasingly driven by Machine Learning (ML) models and their non-deterministic behavior is affecting the entire application life cycle from design to operation. The pervasive adoption of ML is urgently calling for approaches that guarantee a stable non-functional behavior of ML-based applications over time and across model changes. To this aim, non-functional properties of ML models, such as privacy, confidentiality, fairness, and explainability, must be monitored, verified, and maintained. This need is even more pressing when modern applications operate in the cloud-edge continuum, increasing their complexity and dynamicity. Existing approaches mostly focus on i) implementing classifier selection solutions according to the functional behavior of ML models, ii) finding new algorithmic solutions to this need, such as continuous re-training. In this paper, we propose a multi-model approach built on dynamic classifier selection, where multiple ML models showing similar non-functional properties are available to the application and one model is selected at a time according to (dynamic and unpredictable) contextual changes. Our solution goes beyond the state of the art by providing an architectural and methodological approach that continuously guarantees a stable non-functional behavior of ML-based applications, is applicable to any ML model, and is driven by non-functional properties assessed on the models themselves. It consists of a two-step process working during application operation, where model assessment verifies non-functional properties of ML models trained and selected at development time, and model substitution guarantees a continuous and stable support of non-functional properties. We experimentally evaluate our solution in a real-world scenario focusing on non-functional property fairness.