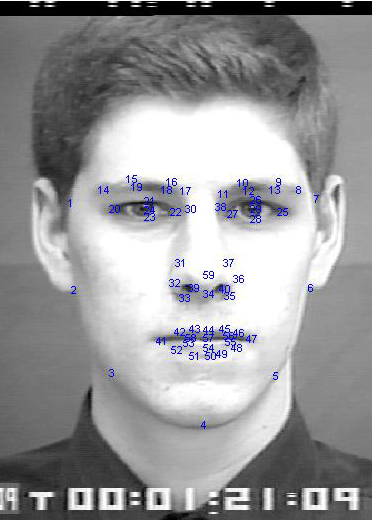

Manual annotations of facial fiducial points on the Cohn-Kanade database

The following ground-truth coordinates of 59 facial fiducial points were manually annotated by Ludovica Regianini, a collaborator of the LAIV (Laboratory for the Analysis of Images and Vision).

NB. No quantitative analysis of the consistency of the annotations is available.

Specifications

Each annotation file is a plain-text ASCII file with the same name as the corresponding image file but with the extension ".txt". It contains a list of pixel coordinates arranged in rows:

P1x P1y

P2x P2y

... ...

P59x P59y

where the positions of P1, P2, ..., P59 are illustrated in the figure.

The coordinates are written in scientific (exponential) notation, and the x and y values on each row are separated by blanks. Some annotation files may end with empty rows, which should be ignored.
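The file format described above can be read with a short parser. The following is a minimal Python sketch, not part of the distribution; the names `annotation_path_for`, `parse_annotation` and `load_annotation` are hypothetical. It assumes the format exactly as specified: one point per row, two blank-separated values in scientific notation, 59 points, and possibly some empty rows.

```python
import io
from pathlib import Path
from typing import List, TextIO, Tuple

NUM_POINTS = 59  # fiducial points P1 ... P59


def annotation_path_for(image_path: str) -> Path:
    """Annotation file name: same as the image file, with extension .txt."""
    return Path(image_path).with_suffix(".txt")


def parse_annotation(stream: TextIO) -> List[Tuple[float, float]]:
    """Parse one annotation file into a list of (x, y) pixel coordinates."""
    points = []
    for line in stream:
        fields = line.split()  # split on any run of blanks
        if not fields:
            continue  # ignore empty rows (some files end with them)
        if len(fields) != 2:
            raise ValueError(f"expected 'x y' on each row, got {line!r}")
        # float() accepts exponential notation such as '1.2345e+02'
        x, y = float(fields[0]), float(fields[1])
        points.append((x, y))
    if len(points) != NUM_POINTS:
        raise ValueError(f"expected {NUM_POINTS} points, got {len(points)}")
    return points


def load_annotation(path) -> List[Tuple[float, float]]:
    """Open an annotation file (plain ASCII text) and parse it."""
    with open(path, "r", encoding="ascii") as f:
        return parse_annotation(f)


if __name__ == "__main__":
    # Synthetic example: 59 identical rows followed by two empty rows.
    sample = "1.2000000e+01 3.4500000e+01\n" * NUM_POINTS + "\n\n"
    pts = parse_annotation(io.StringIO(sample))
    print(len(pts), pts[0])  # 59 (12.0, 34.5)
```

Raising an error when the row count differs from 59 is a design choice of this sketch; a more lenient reader could simply return whatever points it finds.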

NB. In the adopted reference system, the first pixel (at the upper-left corner of the image) has coordinates (1,1).
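Since the reference system is 1-based, coordinates usually need shifting before they are used with 0-based image arrays. The following Python sketch shows one way to do this; the helper names are hypothetical, and it assumes (this is not stated above) that x runs along the image columns and y along the rows.

```python
import math
from typing import List, Tuple


def to_zero_based(points: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Shift 1-based annotation coordinates so the first pixel is (0, 0)."""
    return [(x - 1.0, y - 1.0) for x, y in points]


def to_array_index(x: float, y: float) -> Tuple[int, int]:
    """Map a 1-based (x, y) annotation to a 0-based (row, col) array index.

    Assumes x is the horizontal (column) coordinate and y the vertical
    (row) coordinate; sub-pixel values are rounded to the nearest pixel,
    with halves rounded up.
    """
    col = math.floor(x + 0.5) - 1
    row = math.floor(y + 0.5) - 1
    return row, col
```

For example, the annotated point (1,1) maps to array index (0, 0), i.e. the upper-left pixel.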

Terms of use:

These manual annotations may be used only under the conditions specified in the file license.txt included in the distribution.

LAIV annotations:

- Cohn-Kanade database (1): download annotations

8795 video frames annotated. The original database contains 487 video sequences in which 97 subjects deliberately pose one or more of the six basic expressions. The sequences vary in length, but all of them start from a neutral expression.

In this distribution, the annotations are organized first by subject and then by expression (A = anger, F = fear, D = disgust, G = sadness, H = happiness, S = surprise).

Note that the original database comes with manual annotations of Facial Action Units, from which the basic facial expressions can be inferred. The annotations of the facial fiducial points complement those and are useful for training both static and dynamic models for the study of basic facial expressions.
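The per-subject, per-expression arrangement of this distribution can be traversed with a short script. The following Python sketch is only illustrative: the expression codes come from the text above, but the on-disk layout `root/<subject>/<expression code>/<frame>.txt` is a hypothetical assumption, as is the function name `iter_annotation_files`; adapt it to the actual archive structure.

```python
from pathlib import Path
from typing import Dict, Iterator, Tuple

# Expression codes used in this distribution.
EXPRESSION_CODES: Dict[str, str] = {
    "A": "anger",
    "F": "fear",
    "D": "disgust",
    "G": "sadness",
    "H": "happiness",
    "S": "surprise",
}


def iter_annotation_files(root: Path) -> Iterator[Tuple[str, str, Path]]:
    """Yield (subject, expression, annotation file) triples in sorted order.

    HYPOTHETICAL layout: root/<subject>/<expression code>/<frame>.txt.
    Unknown expression codes are passed through unchanged.
    """
    for subject_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for expr_dir in sorted(p for p in subject_dir.iterdir() if p.is_dir()):
            expression = EXPRESSION_CODES.get(expr_dir.name, expr_dir.name)
            for txt_file in sorted(expr_dir.glob("*.txt")):
                yield subject_dir.name, expression, txt_file
```

Each yielded file can then be read with any parser for the row format described in the specifications.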

(1) T. Kanade, J. F. Cohn, Y. Tian. Comprehensive database for facial expression analysis. Proceedings of the 4th IEEE International Conference on Automatic Face and Gesture Recognition, 2000.
