IGER for Rehabilitation. Within the EC-funded project REWIRE, coordinated by us, we have developed a novel game engine, IGER, that combines classical gaming functionalities with the advanced features, based on computational intelligence, that are required to support rehabilitation at home. These are: real-time monitoring of the correctness of exercise execution (video); adaptation and configuration of the games to the patient's status (video); feedback to the patient through a virtual therapist who accompanies the patient throughout the rehabilitation (video); and support for different devices. In particular, monitoring feedback is given by coloring the patient's avatar itself, so that it is easily distinguishable. Adaptation relies on a Bayesian framework that takes into account both the a priori judgement of the therapist and the real-time analysis of performance data. Different forms of feedback can be proposed, such as mascots, smileys, or a virtual therapist. Finally, Natural User Interfaces (NUI) based on gestures or voice recognition have been implemented.
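The Bayesian adaptation framework is not detailed on this page. As a minimal sketch, assuming that the therapist's a priori judgement can be expressed as pseudo-counts of a Beta prior over the probability that the patient performs an exercise correctly, and that each trial yields a success/failure outcome, a Beta-Bernoulli model can be updated in real time and used to raise or lower the game difficulty. The class name, thresholds, and difficulty scale are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BetaSkillModel:
    """Hypothetical Beta-Bernoulli model of the patient's success probability.

    alpha/beta start from the therapist's a priori judgement (pseudo-counts)
    and are updated with every observed trial outcome.
    """
    alpha: float
    beta: float

    @classmethod
    def from_therapist(cls, expected_success: float, confidence: float) -> "BetaSkillModel":
        # expected_success in (0, 1): therapist's estimate of the success rate.
        # confidence: how many "virtual trials" that estimate is worth.
        return cls(alpha=expected_success * confidence,
                   beta=(1.0 - expected_success) * confidence)

    def update(self, success: bool) -> None:
        # Conjugate update: a success increments alpha, a failure increments beta.
        if success:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    @property
    def mean(self) -> float:
        # Posterior mean of the success probability.
        return self.alpha / (self.alpha + self.beta)


def next_difficulty(level: int, model: BetaSkillModel,
                    low: float = 0.5, high: float = 0.8,
                    min_level: int = 1, max_level: int = 10) -> int:
    """Hypothetical rule: harder if the patient succeeds often,
    easier if they struggle, otherwise keep the current level."""
    p = model.mean
    if p > high:
        return min(level + 1, max_level)
    if p < low:
        return max(level - 1, min_level)
    return level
```

With a neutral prior (0.5, worth 4 trials), eight consecutive successes move the posterior mean to 10/12, about 0.83, above the upper threshold, so the level increases by one. The `confidence` parameter controls how quickly performance data override the therapist's initial judgement.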

IGER is meant to work inside a Patient Station, aimed at guiding patients through rehabilitation at home, which is tightly connected to a Hospital Station where the clinicians direct the rehabilitation. For this reason, a middleware called IDRA (Input Device for Rehabilitation Assistance) has been implemented; it allows several devices to be plugged in, so that the most adequate one can be used for each exercise.
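IDRA's internal design is not described here. The following sketch assumes a plug-in architecture in which every device exposes the same interface plus a set of capability tags, and a registry selects, for each exercise, a device that offers all the required capabilities. The class names, capability strings, and the "most specific device wins" rule are all hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Dict, FrozenSet, List, Optional


class InputDevice(ABC):
    """Hypothetical common interface implemented by every plugged-in device."""
    name: str = "device"
    capabilities: FrozenSet[str] = frozenset()

    @abstractmethod
    def read(self) -> Dict[str, float]:
        """Return the latest sample as a dictionary of named channels."""


class DeviceRegistry:
    """Hypothetical registry: devices are plugged in at runtime and the
    most adequate one is selected for each exercise."""

    def __init__(self) -> None:
        self._devices: List[InputDevice] = []

    def plug(self, device: InputDevice) -> None:
        self._devices.append(device)

    def best_for(self, required: FrozenSet[str]) -> Optional[InputDevice]:
        # Keep only devices providing every required capability, then prefer
        # the one with the fewest extra capabilities (the most specific one).
        candidates = [d for d in self._devices if required <= d.capabilities]
        if not candidates:
            return None
        return min(candidates, key=lambda d: len(d.capabilities - required))


class BalanceBoard(InputDevice):
    name = "balance_board"
    capabilities = frozenset({"cop"})

    def read(self) -> Dict[str, float]:
        return {"cop_x": 0.0, "cop_y": 0.0}  # placeholder sample


class DepthCamera(InputDevice):
    name = "depth_camera"
    capabilities = frozenset({"skeleton", "gesture", "cop_estimate"})

    def read(self) -> Dict[str, float]:
        return {"hand_x": 0.0, "hand_y": 0.0}  # placeholder sample
```

Keeping device selection in the registry means a game only declares what it needs (for example `{"cop"}`) and never depends on a specific piece of hardware.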

A set of mini-games has been developed on IGER to support posture and balance rehabilitation. The games are briefly described in these videos:

- Animal hurdler to guide leg-raising exercises.

- FireFighter to guide stepping in all directions.

- Fruit catcher to guide either lateral weight shifting or lateral stepping.

- Gathering fruit to guide stepping in all directions.

- Hay collect to guide lateral weight shifting.

- Animal hurdler to guide sit-to-stand movements.

- Pump the wheel to guide leg-raising movements.

- Scare crow to guide standing still.

- Shape follower to guide arm movements.

- Balloon popper to guide upper arm movements or neglect rehabilitation.

- Balloon popper with silhouette tracking.

- Animal feeder to train dual tasks: balancing while moving the arms.

Some of these games are played while tracking the center of pressure (COP) through a balance board, and a few use a haptic device to track and perturb the hand. All games use Kinect for monitoring, NUI, and whole-body tracking.
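How the COP is computed is not described here. As a sketch, assuming a board with four load cells at the corners of a rectangle (as on the Wii Balance Board), the COP is the force-weighted average of the sensor positions; with the origin at the board center this reduces to the two expressions below. The sensor layout, axis conventions, and function name are assumptions.

```python
from typing import Tuple


def center_of_pressure(tl: float, tr: float, bl: float, br: float,
                       width: float, length: float) -> Tuple[float, float]:
    """Estimate the COP from four corner load cells.

    tl, tr, bl, br: forces measured at the top-left, top-right,
    bottom-left and bottom-right corners.
    width, length: distances between left/right and top/bottom sensors.
    Returns (x, y) relative to the board center, x to the right, y forward.
    """
    total = tl + tr + bl + br
    if total <= 0:
        raise ValueError("no load on the board")
    # Each sensor sits at +/- width/2 and +/- length/2 from the center,
    # so the weighted average simplifies to a normalized difference.
    x = (width / 2.0) * ((tr + br) - (tl + bl)) / total
    y = (length / 2.0) * ((tl + tr) - (bl + br)) / total
    return x, y
```

A lateral weight-shift game such as Hay collect could, for instance, map `x` to the horizontal position of the avatar; with equal loads on all four corners the COP is at the center, `(0.0, 0.0)`.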

The FITREHAB subproject (http://www.innovation4welfare.eu/fitrehab - http://fitrehab.dsi.unimi.it) was financed by the Structural Funds of the EC and coordinated by us. It has integrated and field-tested a new, innovative virtual-reality-based rehabilitation and training platform that allows patients and people discharged from hospital to perform physical exercise at home under remote expert planning and monitoring. The main objective was to field-test the platform in order to assess its clinical efficacy, its deployment within the existing hospital infrastructure, and its benefits for national health systems in terms of cost and performance. The platform consists of two integrated stations. The first is used in hospitals by the medical staff to schedule a customized program of exercises for each patient. The second is used by the patient at home to exercise with a virtual environment acting as a trainer. The patient's movements are tracked by several sensors attached to a smart shirt, and an avatar mimics those movements in the virtual environment while the patient is prompted to follow a plan of exercises. Besides movement sensors, biological sensors are also attached to the shirt to monitor heart rate, temperature, and breathing effort. All this information is sent back to the hospital station, where the medical staff review it and adapt the scheduled program if needed. In this way, the patient is always under supervision, and the treatment can be adjusted and customized to the specific needs of each patient.

Duck Neglect. In collaboration with Niguarda Hospital in Milano, we have realized a system for the rehabilitation of patients with neglect, based on our rehabilitation platform. The platform is under test with patients. Some videos of the first patients are shown here (video1, video2, video3).

Particular care has been taken in designing the rehabilitation exercises, which follow neuropsychological rehabilitation rules. In this pilot, we developed a visual search task in which the subject is instructed to hit with the hand one or more objects belonging to a certain class (targets) while avoiding distractors. The system tracks the motion of the hands and logs collisions with both targets and distractors; the hand trajectories are also logged. Both targets and distractors are balanced between the left and right sides and between the upper and lower regions. We used natural backgrounds and objects that can be experienced in everyday life, such as cans on the shelves of a home kitchen. The exercises progress in terms of difficulty (number of visual and auditory cues) and complexity (number of targets to be hit).
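The balancing of targets and distractors and the collision log are described above but not their implementation. A minimal sketch, assuming normalized screen coordinates, counts that are multiples of four, and a hypothetical laterality index (right minus left target hits, divided by their sum) as one possible summary of the log; positive values mean that right-side targets were hit more often. All names are illustrative.

```python
import random
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple

QUADRANTS = [("left", "upper"), ("right", "upper"),
             ("left", "lower"), ("right", "lower")]


@dataclass
class Item:
    kind: str   # "target" or "distractor"
    side: str   # "left" or "right"
    level: str  # "upper" or "lower"
    x: float    # normalized screen coordinates in [0, 1]
    y: float


def place_balanced(n_targets: int, n_distractors: int, seed: int = 0) -> List[Item]:
    """Spread targets and distractors evenly over the four screen quadrants."""
    if n_targets % 4 or n_distractors % 4:
        raise ValueError("counts must be multiples of 4")
    rng = random.Random(seed)
    items = []
    for kind, n in (("target", n_targets), ("distractor", n_distractors)):
        for side, level in QUADRANTS:
            for _ in range(n // 4):
                # Left/right half of the screen; upper/lower half (screen y grows downward).
                x = rng.uniform(0.0, 0.5) + (0.5 if side == "right" else 0.0)
                y = rng.uniform(0.0, 0.5) + (0.5 if level == "lower" else 0.0)
                items.append(Item(kind, side, level, x, y))
    return items


def score(hit_items: List[Item]) -> Tuple[Counter, int, float]:
    """Return target hits per side, number of distractors hit (errors),
    and the laterality index computed over target hits."""
    targets = Counter(it.side for it in hit_items if it.kind == "target")
    errors = sum(1 for it in hit_items if it.kind == "distractor")
    total = targets["left"] + targets["right"]
    index = (targets["right"] - targets["left"]) / total if total else 0.0
    return targets, errors, index
```

A session in which only right-side targets are hit yields an index of 1.0, while a fully balanced performance yields 0.0.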

The validation of this platform could lead to its diffusion as an at-home technology, cutting the usual costs of VR treatments. All the components can remain the same, except for the projection screen and the projector, which can be replaced by a TV screen. Moreover, the program interface can also be used by non-qualified personnel, such as the patient's caregiver.

A video can be downloaded from here (82 Mbyte). A video of part of a patient's session can be downloaded from here.


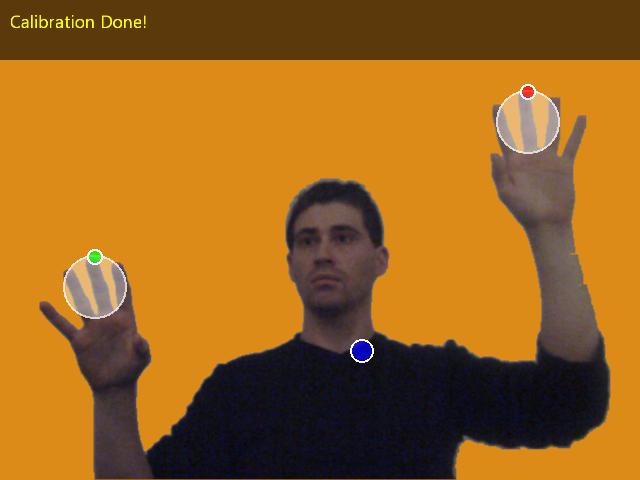

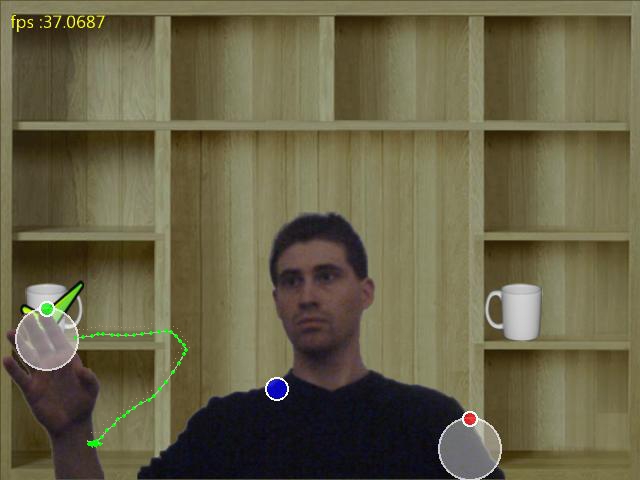

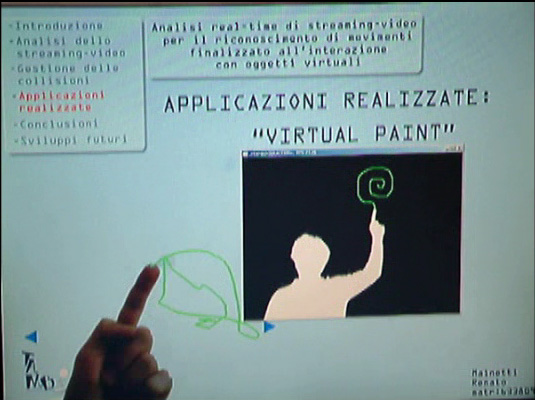

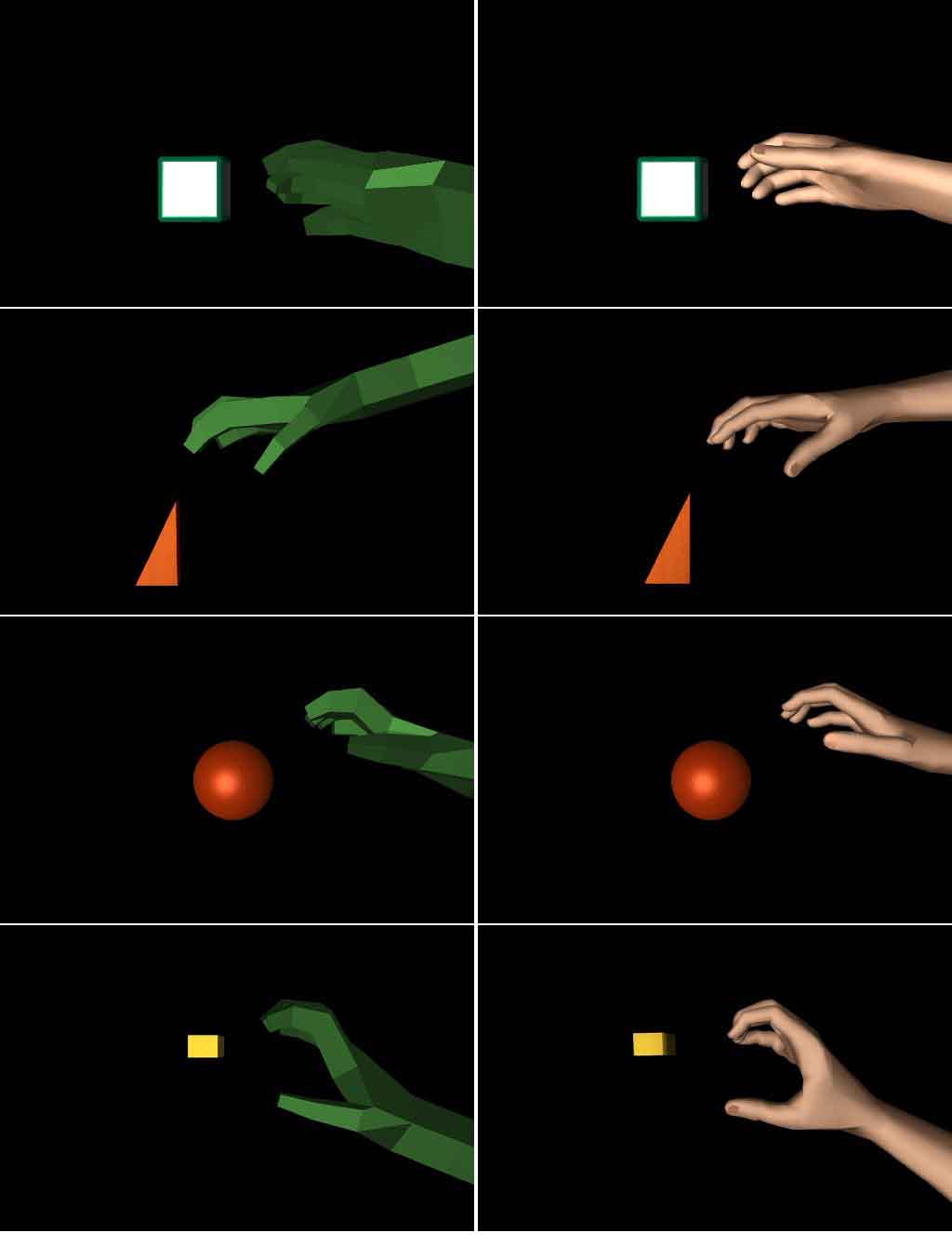

Virtual Rehabilitation. We have realized a video installation for the rehabilitation of patients with motor or neurocognitive deficits. The system consists of a webcam and a host computer. The patient stands or sits in front of the camera, free to move, with a white curtain behind him. He watches a projection screen positioned in front of him at a distance of about 1.5 m, onto which the host PC projects a virtual scene. The webcam, connected to the same host PC, is positioned below the screen and aimed at the patient's body. The host receives the video stream and, through a set of optimized algorithms, extracts the patient's silhouette in real time. The silhouette is "pasted" over a virtual scenario and displayed in real time either on the host monitor or on a wall or large screen through a digital projector. The patient therefore sees himself moving inside the virtual world. Efficient collision detection algorithms detect collisions between the patient's body parts (e.g., a hand) and objects in the virtual world (e.g., buttons). Collisions trigger changes in the virtual scenario, such as a change in the music, in the shape of objects, or in the entire scene, giving the subject an immersive experience. A preliminary video is shown here (3 Mbyte). The very limited cost of the system (a 100-Euro webcam plus a basic host PC) allows it to be widely deployed. It can therefore become a great help for the rehabilitation and monitoring of patients who would otherwise need to be hospitalized. An earlier video showing virtual painting and moving virtual objects can be downloaded here (2 Mbyte).
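The page only mentions "a set of optimized algorithms". The sketch below swaps in the simplest technique this setup allows: because the patient stands in front of a white curtain, pixels darker than a threshold are labeled as body, the resulting mask is composited over the virtual scene, and a collision is reported when enough body pixels fall inside a virtual object's bounding box. The grayscale frame format, the threshold value, and the function names are assumptions.

```python
from typing import List, Tuple

Frame = List[List[int]]   # grayscale image, values 0 (black) .. 255 (white)
Mask = List[List[bool]]


def extract_silhouette(frame: Frame, threshold: int = 200) -> Mask:
    """Mark as body every pixel darker than the white curtain behind the patient."""
    return [[pixel < threshold for pixel in row] for row in frame]


def collides(mask: Mask, box: Tuple[int, int, int, int], min_pixels: int = 1) -> bool:
    """True if at least min_pixels silhouette pixels fall inside the virtual
    object's bounding box (x0, y0, x1, y1); x1 and y1 are exclusive."""
    x0, y0, x1, y1 = box
    count = 0
    for row in mask[y0:y1]:
        count += sum(row[x0:x1])  # True counts as 1
        if count >= min_pixels:
            return True  # stop early once the contact is confirmed
    return count >= min_pixels


def composite(frame: Frame, mask: Mask, scene: Frame) -> Frame:
    """Paste the patient's silhouette over the virtual scene."""
    return [[f if m else s for f, m, s in zip(frow, mrow, srow)]
            for frow, mrow, srow in zip(frame, mask, scene)]
```

Requiring more than one pixel (`min_pixels`) is a simple way to keep isolated noisy pixels from triggering a change in the scene.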

Video installation. This is a low-cost system based on webcam video-stream processing. It is targeted at memory/motor rehabilitation for motor and cognitive deficits. "This project was carried out thanks to the funds of the INGENIO Global Grant, provided by the European Social Fund, the Ministry of Labour and Social Security, and the Lombardy Region." A demo can be seen here.


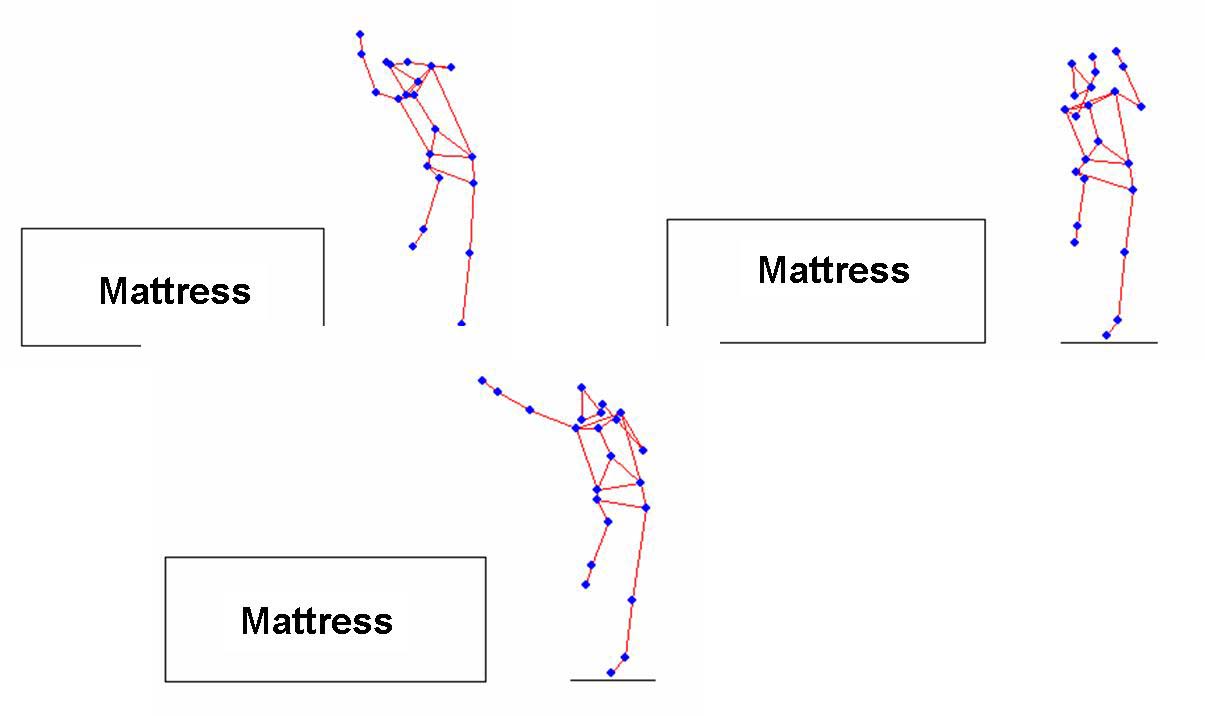

Coordination in sport gestures. We are investigating how the different body segments are coordinated when difficult kinematic goals have to be reached. We have recorded the high-jump gesture of top-class athletes and are in the process of characterizing the different motion strategies (styles); three different styles are shown in the left panels. Here is an example of the reconstructed motion (3.035bytes). More recently, we have been studying free-climber motion. Preliminary results will be presented at ISBS 2006.

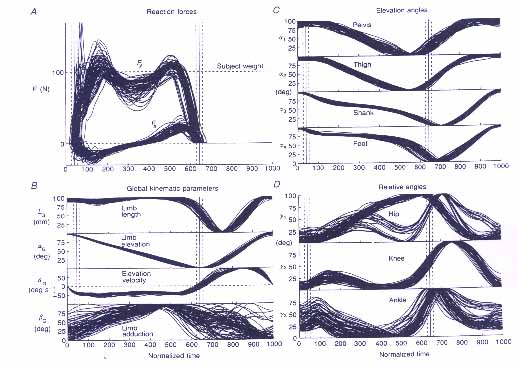

Determinants of Human Motion. We have studied which kinematic patterns are invariant to different anatomical measures and speeds. In gait, these invariants take the form of a covariation of the elevation angles. This holds for most of the step duration; however, during the most dynamic phases, high variability is recorded in the time course of the lower segments. More information is provided in the 1996 Journal of Physiology and 1997 Archives Italiennes de Biologie papers. Most of this work was published in biological journals.

Here is a preliminary video.